SPECTRA: Towards a New Framework that Accelerates Large Language Model Inference

Researchers from JAIST present a new speculative decoding framework that optimizes internal and external speculation and outperforms state-of-the-art decoding methods

Speculative decoding has emerged as a potential solution for speeding up inference with large language models (LLMs). However, training-based speculative methods require the creation of draft models that typically lack generalizability, whereas training-free speculative frameworks offer only limited speed-up gains. SPECTRA, a novel speculative framework developed by researchers from JAIST, accelerates LLM inference without additional training and surpasses existing training-free speculative decoding methods across diverse tasks, making it a practical tool for real-world LLM applications.

High-quality output at low latency is a critical requirement when using large language models (LLMs), especially in real-world scenarios such as chatbots interacting with customers or the AI code assistants used by millions of users daily. Currently, LLMs rely on autoregressive decoding, in which text is generated one token at a time and all previously generated text is used to predict the next token. This is inefficient for long outputs, because the time to generate a response grows linearly with the number of tokens produced.

To address this issue, researchers are widely exploring speculative decoding, which follows a "guess and verify" framework. In this approach, a specifically trained smaller LLM guesses multiple tokens in advance, which are then verified together by the original LLM, substantially reducing the response generation time. But these approaches require additional model training and extensive computational resources. Researchers have also explored training-free speculative methods, but their speedup gains remain limited owing to the lower quality of their speculative guesses.
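The "guess and verify" idea can be illustrated with a minimal Python sketch. It is not the SPECTRA implementation: `speculative_step` and `toy_target` are hypothetical names, the "model" is a toy function that always predicts the previous token plus one, and verification is shown for the greedy case only. A real system would check all guesses in a single batched forward pass rather than in a loop.

```python
from typing import Callable, List


def speculative_step(target_next: Callable[[List[int]], int],
                     prefix: List[int],
                     draft: List[int]) -> List[int]:
    """Return the tokens accepted in one guess-and-verify step (greedy case)."""
    accepted: List[int] = []
    context = list(prefix)
    for guess in draft:
        # What the target model itself would emit at this position.
        expected = target_next(context)
        if guess != expected:
            # First wrong guess: keep the target's token and stop.
            accepted.append(expected)
            return accepted
        accepted.append(guess)
        context.append(guess)
    # Every guess matched, so the target's next token comes for free.
    accepted.append(target_next(context))
    return accepted


def toy_target(context: List[int]) -> int:
    """Hypothetical stand-in for an LLM: predicts (last token + 1) mod 10."""
    return (context[-1] + 1) % 10


# Two of three guesses are correct; the third is replaced by the target's token.
print(speculative_step(toy_target, [1, 2, 3], [4, 5, 9]))
```

Because a rejected guess is always replaced by the target model's own token, the output is the same as plain autoregressive decoding; only the number of verification steps changes.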

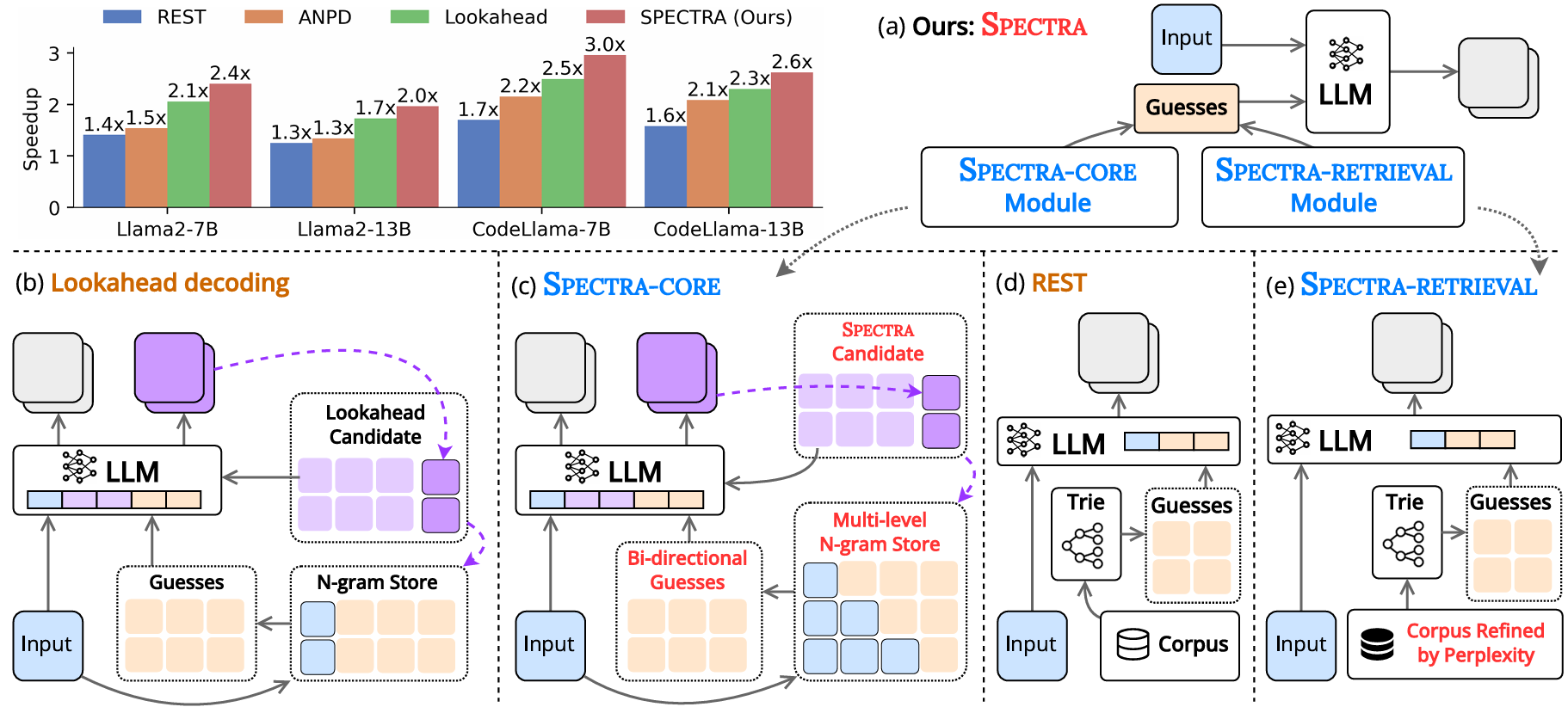

To address these gaps in the field, Professor Nguyen Le Minh and his doctoral students, Nguyen-Khang Le and Dinh-Truong Do, from the Japan Advanced Institute of Science and Technology (JAIST) recently developed a new speculative decoding framework called SPECTRA and demonstrated accelerated text generation without any need for additional training. "The framework consists of two main components: a core module (SPECTRA-CORE), which integrates seamlessly into LLMs in a plug-and-play manner, and an optional retrieval module (SPECTRA-RETRIEVAL) that further enhances performance," explains Prof. Nguyen. The team's findings were presented orally at the 63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025) by Dinh-Truong Do.

SPECTRA-CORE, the core module, generates high-quality guesses by using the text distribution pattern predicted by the LLM itself, improving speculative decoding. In this system, dictionaries containing word sequences of different sizes (N-grams) can be searched bidirectionally (forward and backward) to predict word combinations, guessing phrases of varying lengths quickly and more accurately. Additionally, SPECTRA keeps optimizing the N-gram dictionaries by constantly updating them with new word combinations, ensuring robust text coverage.
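To make the dictionary idea concrete, here is a small Python sketch of a multi-level N-gram store that is updated with newly seen text and queried for a guess. It rests on several assumptions: the class `NGramStore`, its methods, and the "longest matching prefix wins" rule are illustrative choices, not details taken from the paper, and only a forward lookup (prefix to next token) is shown because the article does not describe how the backward search works.

```python
from typing import Dict, List, Optional, Tuple


class NGramStore:
    """Hypothetical multi-level store: one table per prefix length."""

    def __init__(self, max_n: int = 3) -> None:
        self.max_n = max_n
        # tables[k] maps a k-token prefix to the most recently seen next token.
        self.tables: Dict[int, Dict[Tuple[int, ...], int]] = {
            k: {} for k in range(1, max_n)
        }

    def update(self, tokens: List[int]) -> None:
        """Record every N-gram (N = 2..max_n) found in newly generated text."""
        for k in range(1, self.max_n):
            for i in range(len(tokens) - k):
                self.tables[k][tuple(tokens[i:i + k])] = tokens[i + k]

    def guess(self, context: List[int], length: int) -> List[int]:
        """Forward lookup: extend the context by up to `length` guessed tokens."""
        out: List[int] = []
        ctx = list(context)
        for _ in range(length):
            nxt: Optional[int] = None
            # Prefer the longest prefix that has been seen before.
            for k in range(self.max_n - 1, 0, -1):
                if len(ctx) >= k:
                    nxt = self.tables[k].get(tuple(ctx[-k:]))
                    if nxt is not None:
                        break
            if nxt is None:
                break  # nothing in the store matches; stop guessing
            out.append(nxt)
            ctx.append(nxt)
        return out


store = NGramStore(max_n=3)
store.update([1, 2, 3, 4])       # text seen so far, as token ids
print(store.guess([1, 2], 3))    # guessed continuation of the context [1, 2]
```

Guesses produced this way cost only dictionary lookups, which is why they can be generated without a separate draft model; their correctness is still decided by the target LLM during verification.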

To speed things up further, the retrieval module, SPECTRA-RETRIEVAL, is integrated into SPECTRA-CORE. Existing approaches that retrieve information from external sources to generate guesses often struggle to integrate with other decoding frameworks, because the search time can outweigh the speedup they provide. In contrast, SPECTRA-RETRIEVAL filters a large dataset of texts and, based on perplexity scores, keeps only the parts that are easy for the target LLM to predict. This, in turn, ensures that only high-quality, relevant data is used as a source of external guesses, enabling seamless integration with SPECTRA-CORE.
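Perplexity-based filtering of this kind can be sketched in a few lines of Python. The sketch assumes that per-token log-probabilities for each passage have already been obtained from the target LLM; the function names, the toy corpus, and the threshold value are all hypothetical and are not taken from the paper. Perplexity is computed with the standard definition, the exponential of the negative mean token log-probability.

```python
import math
from typing import Dict, List


def perplexity(token_logprobs: List[float]) -> float:
    """Standard perplexity: exp of the negative mean token log-probability."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def keep_predictable(corpus: Dict[str, List[float]], max_ppl: float) -> List[str]:
    """Keep passages whose perplexity under the target model is at most max_ppl."""
    return [text for text, lps in corpus.items() if perplexity(lps) <= max_ppl]


# Toy corpus: passage text mapped to made-up per-token log-probabilities.
corpus = {
    "easy passage": [math.log(0.5)] * 4,    # each token has probability 0.5
    "hard passage": [math.log(0.01)] * 4,   # each token has probability 0.01
}
print(keep_predictable(corpus, max_ppl=10.0))
```

Low perplexity means the target model already finds the passage predictable, so guesses drawn from it are more likely to pass verification, and a smaller filtered datastore is also cheaper to search.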

In their study, the team tested SPECTRA on six tasks, including multi-turn conversations, code generation, and mathematical reasoning, across three LLM families: Llama 2, Llama 3, and CodeLlama. SPECTRA achieved speedups of up to 4x and outperformed state-of-the-art training-free speculative decoding methods, notably REST, ANPD, and Lookahead. While the model architecture and dataset characteristics influenced the speedup achieved by every speculative decoding method, SPECTRA proved reliable across the range of models and datasets, consistently delivering higher speedup ratios.

"By integrating our plug-and-play SPECTRA-CORE module, which leverages multi-level N-gram storage and bidirectional search, with the refined SPECTRA-RETRIEVAL module that selects high-quality external cues via perplexity-based filtering, we were able to achieve substantial speedups (up to 4.08×) across diverse tasks and model architectures while preserving the original model's output quality," highlights Prof. Nguyen.

Overall, by reducing the response generation time without needing to retrain LLMs, SPECTRA offers a practical solution for commercial and research systems that use LLMs, and it could improve the accessibility and sustainability of high-performance AI in the long term.

Image title: Schematic diagram of SPECTRA and other existing training-free approaches.

Image caption: This figure shows an overview of SPECTRA and compares its functionality with other training-free state-of-the-art approaches across a range of applications. SPECTRA comprises two main modules, namely a core component (SPECTRA-CORE) and an optional retrieval module (SPECTRA-RETRIEVAL) that enhances its performance. While the SPECTRA-CORE module uses the knowledge inside the LLM to obtain guesses, the SPECTRA-RETRIEVAL module is designed to source external guesses and can be integrated efficiently with SPECTRA-CORE to boost the speed of text generation.

Image credit: Nguyen Le Minh from JAIST

License type: Original Content

Usage restrictions: Cannot be reused without permission.

Reference

| Item | Details |
| --- | --- |
| Title of original paper: | SPECTRA: Faster Large Language Model Inference with Optimized Internal and External Speculation |
| Authors: | Nguyen-Khang Le*, Dinh-Truong Do*, Nguyen Le Minh |
| Conference: | Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025) |
| DOI: | 10.18653/v1/2025.acl-long.685 |

Funding information

This work was supported by JST SPRING (Support for Pioneering Research Initiated by the Next Generation), Japan, Grant Number JPMJSP2102.

August 7, 2025